CIFAR-10 Image Classifier with PyTorch

By William Wong

Prepare the packages we will use.

import time

from typing import List, Dict

import torch

import torch.nn as nn

import torch.optim as optim

import torch.nn.functional as F

import torchvision

import torchvision.models as models

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

Train model on GPU

print(f'Can I use GPU now? -- {torch.cuda.is_available()}')

Can I use GPU now? -- True

Move model and data to the GPU and back

We need to:

- enable GPU acceleration in Colab,

- put the model on the GPU, and

- move the input data (i.e., the batch of samples) onto the GPU with to() after the data loader has produced it. Usually only one batch is placed on the GPU at a time, so the CPU can keep loading data while the GPU computes.

rand_tensor = torch.rand(5,2)

simple_model = nn.Sequential(nn.Linear(2,10), nn.ReLU(), nn.Linear(10,1))

print(f'input is on {rand_tensor.device}')

print(f'model parameters are on {[param.device for param in simple_model.parameters()]}')

print(f'output is on {simple_model(rand_tensor).device}')

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Move rand_tensor and model onto the GPU device

rand_tensor = rand_tensor.to(device)

simple_model = simple_model.to(device)

print(f'input is on {rand_tensor.device}')

print(f'model parameters are on {[param.device for param in simple_model.parameters()]}')

print(f'output is on {simple_model(rand_tensor).device}')

input is on cpu
model parameters are on [device(type='cpu'), device(type='cpu'), device(type='cpu'), device(type='cpu')]
output is on cpu
input is on cuda:0
model parameters are on [device(type='cuda', index=0), device(type='cuda', index=0), device(type='cuda', index=0), device(type='cuda', index=0)]
output is on cuda:0

Why use a CNN rather than only fully connected layers?

We will build two models for the MNIST dataset: one uses only fully connected layers and the other uses a standard CNN layout (convolutional layers throughout, followed by fully connected layers at the end). We will use cross-entropy loss as our objective function. The two models are built to reach roughly the same accuracy, so that we can compare their number of parameters (a large parameter count can affect training/testing time, memory requirements, overfitting, etc.).

Prepare train and test function

We will put our training and testing procedures in two functions. The train function should run one epoch of training. Both functions should take everything needed for training or testing as inputs and return some logs.

Argument Constraints:

For the train function, it takes the model, loss_fn, optimizer, train_loader, and epoch as arguments.

- model: the classifier, or deep neural network, should be an instance of nn.Module.

- loss_fn: the loss function instance, for example nn.CrossEntropyLoss() or nn.L1Loss().

- optimizer: should be an instance of torch.optim.Optimizer. For example, it could be optim.SGD() or optim.Adam(), etc.

- train_loader: should be an instance of torch.utils.data.DataLoader.

- epoch: the current epoch number. Only used for log printing (default: 0).

For the test function, it takes all the inputs above except for the optimizer (and it takes a test loader instead of a train loader).

Return Constraints

The train function should return a list whose elements are the per-batch losses, i.e., one loss value for every batch.

The test function should return a dictionary with three keys: "loss", "accuracy", and "prediction". The values are the average loss over the whole test set, the accuracy over the whole test set, and the predictions for all test samples.

Other Constraints:

In the train function, the model should be updated in-place, i.e., do not copy the model inside train function.
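The "average loss over the whole test set" is easiest to get right as a per-sample average, which is why the test function below multiplies each batch loss by the batch size before summing. The sketch below uses made-up loss values (an assumption for illustration only) to show how this differs from simply averaging the per-batch means when the last batch is smaller. With the 10,000-sample MNIST test set and batch_size=128, the last batch holds only 16 samples.

```python
# Hypothetical per-batch results: (mean loss of the batch, number of samples in it).
# The loss values are invented; only the batch sizes mirror a short final batch.
batches = [(0.50, 128), (0.40, 128), (2.00, 16)]

# Mean of per-batch means: every batch counts equally, regardless of its size.
mean_of_batch_means = sum(loss for loss, _ in batches) / len(batches)

# Sample-weighted mean: every sample counts equally.
total_samples = sum(n for _, n in batches)
per_sample_mean = sum(loss * n for loss, n in batches) / total_samples

print(f"mean of batch means: {mean_of_batch_means:.4f}")
print(f"per-sample mean:     {per_sample_mean:.4f}")
```

The two numbers agree only when all batches have the same size. Dividing a running loss by len(loader) gives the first quantity, and weighting by batch size gives the second.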

def train(model: nn.Module,
          loss_fn: nn.modules.loss._Loss,
          optimizer: torch.optim.Optimizer,
          train_loader: torch.utils.data.DataLoader,
          epoch: int = 0) -> List:
    model.train()
    train_loss = []
    # Log about ten times per epoch; max() avoids a modulo by zero for loaders with fewer than 10 batches
    print_freq = max(1, len(train_loader) // 10)
    for batch_idx, (images, targets) in enumerate(train_loader):
        optimizer.zero_grad()
        outputs = model(images)
        loss = loss_fn(outputs, targets)
        loss.backward()
        optimizer.step()
        train_loss.append(loss.item())
        if batch_idx % print_freq == 0:
            print(f'Epoch {epoch}: [{batch_idx*len(images)}/{len(train_loader.dataset)}] Loss: {loss.item():.3f}')
    return train_loss

def test(model: nn.Module,
         loss_fn: nn.modules.loss._Loss,
         test_loader: torch.utils.data.DataLoader,
         epoch: int = 0) -> Dict:
    model.eval()
    test_stat = {"loss": 0.0, "accuracy": 0.0, "prediction": []}
    total_num = 0
    with torch.no_grad():
        for images, targets in test_loader:
            outputs = model(images)
            loss = loss_fn(outputs, targets)
            # loss is the batch mean, so weight it by the batch size
            test_stat["loss"] += loss.item() * images.size(0)
            _, predicted = torch.max(outputs, 1)
            test_stat["accuracy"] += (predicted == targets).sum().item()
            test_stat["prediction"].append(predicted)
            total_num += images.size(0)
    test_stat["loss"] /= total_num
    test_stat["accuracy"] /= total_num
    test_stat["prediction"] = torch.cat(test_stat["prediction"])
    print(f"Test result on epoch {epoch}: total sample: {total_num}, Avg loss: {test_stat['loss']:.3f}, Acc: {100*test_stat['accuracy']:.3f}%")
    return test_stat

Creating the Two Networks:

One network, named OurFC, should consist of only fully connected layers.

Another network, named OurCNN, applies a standard CNN structure.

A standard CNN structure can be composed as [Conv2d, MaxPooling, ReLU] x num_conv_layers + FC x num_fc_layers
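When convolutional layers feed into fully connected ones, the input size of the first FC layer depends on how each convolution and pooling step shrinks the feature map. The sketch below traces this for a 28x28 MNIST image. The kernel sizes, the absence of padding, and the 32 output channels are assumptions chosen to match the OurCNN class defined later in this notebook, and conv_out is a hypothetical helper, not a PyTorch function.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                                   # MNIST images are 28x28
size = conv_out(size, kernel=5)             # 5x5 convolution, no padding
size = conv_out(size, kernel=2, stride=2)   # 2x2 max pooling
size = conv_out(size, kernel=3)             # 3x3 convolution, no padding
size = conv_out(size, kernel=2, stride=2)   # 2x2 max pooling

channels = 32                               # output channels of the last convolution
flat_features = channels * size * size      # input size of the first FC layer
print(f"final feature map: {size}x{size}, flattened features: {flat_features}")
```

The same helper with padding=1 covers "same"-sized 3x3 convolutions, where only the pooling layers reduce the resolution.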

Training/Testing Constraints:

We will train and test our network on the MNIST dataset.

# Download MNIST and transformation

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

trainset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True)

testset = torchvision.datasets.MNIST(root='./data', train=False, download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=128, shuffle=False)

100%|██████████| 9.91M/9.91M [00:00<00:00, 16.3MB/s]
100%|██████████| 28.9k/28.9k [00:00<00:00, 505kB/s]
100%|██████████| 1.65M/1.65M [00:00<00:00, 3.84MB/s]
100%|██████████| 4.54k/4.54k [00:00<00:00, 7.72MB/s]

# Build OurFC class and OurCNN class.

class OurFC(nn.Module):
    def __init__(self):
        super(OurFC, self).__init__()
        self.flatten = nn.Flatten()
        self.fc1 = nn.Linear(28 * 28, 256)
        self.relu1 = nn.ReLU()
        self.fc2 = nn.Linear(256, 128)
        self.relu2 = nn.ReLU()
        self.fc3 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu1(x)
        x = self.fc2(x)
        x = self.relu2(x)
        x = self.fc3(x)
        return x

class OurCNN(nn.Module):
    def __init__(self):
        super(OurCNN, self).__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5)
        self.relu1 = nn.ReLU()
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3)
        self.relu2 = nn.ReLU()
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.flatten = nn.Flatten()
        self.fc1 = nn.Linear(32 * 5 * 5, 128)
        self.relu3 = nn.ReLU()
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.relu1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.relu2(x)
        x = self.pool2(x)
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu3(x)
        x = self.fc2(x)
        return x

# Let's first train the FC model.

criterion = nn.CrossEntropyLoss()

start = time.time()

max_epoch = 4

# Note: these definitions replace the train() and test() functions defined earlier.
def train(model, trainloader, criterion, optimizer, max_epoch):
    model.train()
    total_params = sum(p.numel() for p in model.parameters() if p.requires_grad)
    print(f'Training with {total_params} trainable parameters')
    for epoch in range(max_epoch):
        running_loss = 0.0
        correct = 0
        total = 0
        for i, data in enumerate(trainloader, 0):
            inputs, labels = data
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
        epoch_loss = running_loss / len(trainloader)
        epoch_acc = 100 * correct / total
        print(f'Epoch {epoch+1}, Loss: {epoch_loss:.4f}, Train Accuracy: {epoch_acc:.2f}%')
        # Evaluate on test set after each epoch
        test_acc = test(model, testloader)
        print(f'Test Accuracy after Epoch {epoch+1}: {test_acc:.2f}%')
        # test() switched the model to eval mode; switch back before the next epoch
        model.train()

def test(model, testloader):
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for data in testloader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    accuracy = 100 * correct / total
    return accuracy

ourfc = OurFC()

optimizer = optim.Adam(ourfc.parameters(), lr=0.001)

train(ourfc, trainloader, criterion, optimizer, max_epoch)

final_test_acc = test(ourfc, testloader)

print(f'Final Accuracy on test set: {final_test_acc:.2f}%')

end = time.time()

print(f'Finished Training after {end-start} s ')

Training with 235146 trainable parameters
Epoch 1, Loss: 0.2691, Train Accuracy: 92.20%
Test Accuracy after Epoch 1: 95.96%
Epoch 2, Loss: 0.0998, Train Accuracy: 96.88%
Test Accuracy after Epoch 2: 97.00%
Epoch 3, Loss: 0.0664, Train Accuracy: 97.92%
Test Accuracy after Epoch 3: 97.48%
Epoch 4, Loss: 0.0503, Train Accuracy: 98.45%
Test Accuracy after Epoch 4: 97.33%
Final Accuracy on test set: 97.33%
Finished Training after 63.559226512908936 s

# Let's then train the OurCNN model.

start = time.time()

ourcnn = OurCNN()

optimizer = optim.Adam(ourcnn.parameters(), lr=0.001)

train(ourcnn, trainloader, criterion, optimizer, max_epoch)

test(ourcnn, testloader)

end = time.time()

print(f'Finished Training after {end-start} s ')

Training with 108874 trainable parameters
Epoch 1, Loss: 0.2030, Train Accuracy: 94.21%
Test Accuracy after Epoch 1: 98.05%
Epoch 2, Loss: 0.0564, Train Accuracy: 98.23%
Test Accuracy after Epoch 2: 98.71%
Epoch 3, Loss: 0.0404, Train Accuracy: 98.77%
Test Accuracy after Epoch 3: 99.03%
Epoch 4, Loss: 0.0319, Train Accuracy: 98.97%
Test Accuracy after Epoch 4: 98.83%
Finished Training after 125.24779844284058 s

ourfc = OurFC()

total_params = sum(p.numel() for p in ourfc.parameters())

print(f'OurFC has a total of {total_params} parameters')

ourcnn = OurCNN()

total_params = sum(p.numel() for p in ourcnn.parameters())

print(f'OurCNN has a total of {total_params} parameters')

OurFC has a total of 235146 parameters
OurCNN has a total of 108874 parameters
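The two totals printed above can be reproduced by hand, which makes it clearer where the parameters sit. This is a minimal sketch assuming the layer sizes of OurFC and OurCNN as defined in this notebook. linear_params and conv2d_params are hypothetical helpers that count weights plus biases.

```python
def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def conv2d_params(c_in: int, c_out: int, k: int) -> int:
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out

# OurFC: 784 -> 256 -> 128 -> 10
fc_total = (linear_params(28 * 28, 256)
            + linear_params(256, 128)
            + linear_params(128, 10))

# OurCNN: conv 1->16 (5x5), conv 16->32 (3x3), then 800 -> 128 -> 10
cnn_total = (conv2d_params(1, 16, 5)
             + conv2d_params(16, 32, 3)
             + linear_params(32 * 5 * 5, 128)
             + linear_params(128, 10))

print(f"OurFC:  {fc_total}")
print(f"OurCNN: {cnn_total}")
print(f"conv layers only: {conv2d_params(1, 16, 5) + conv2d_params(16, 32, 3)}")
```

Under these assumptions the two convolutional layers together hold only about five thousand parameters. Most of OurCNN's parameters sit in its first fully connected layer.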

Questions: Which one has more parameters? Which one is likely to have less computational cost when deployed? Which one took longer to train?

Answers: Our fully connected network has more parameters (235,146 versus 108,874), yet it is likely to be less computationally expensive when deployed, and it also trained faster (about 64 s versus about 125 s for the CNN in the runs above). The reason comes from the nature of the dataset we used. Each fully connected layer performs a single matrix multiplication per input, whereas a convolutional layer slides small filters (5x5, 3x3, etc.) over the image and computes a dot product at every position, so its few weights are reused many times. MNIST images are only 28x28 (784 pixels), and such small inputs favor the FC network: one large matrix multiplication costs less than many small ones repeated at every position.

It is easy to see where this stops working. Most real-world images are far larger than 28x28, and photos found through an image search are often 1000x1000 pixels or more. The weight count of the first fully connected layer grows in proportion to the number of input pixels, whereas a convolutional filter keeps the same number of weights regardless of image size.
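To put rough numbers on this argument, the sketch below counts multiply-accumulate operations (MACs) for one forward pass of each MNIST network. It is a back-of-the-envelope estimate under stated assumptions: the layer sizes are those of OurFC and OurCNN, biases, ReLU and pooling are ignored, and real runtime also depends on hardware and memory access. linear_macs and conv2d_macs are hypothetical helpers.

```python
def linear_macs(n_in: int, n_out: int) -> int:
    """One multiply-accumulate per weight for a single input."""
    return n_in * n_out

def conv2d_macs(c_in: int, c_out: int, k: int, out_h: int, out_w: int) -> int:
    """Each output value needs c_in * k * k multiply-accumulates."""
    return out_h * out_w * c_out * c_in * k * k

# OurFC on a 28x28 image
fc_macs = linear_macs(784, 256) + linear_macs(256, 128) + linear_macs(128, 10)

# OurCNN on a 28x28 image: conv1 yields 24x24 maps, conv2 yields 10x10 maps
cnn_macs = (conv2d_macs(1, 16, 5, 24, 24)
            + conv2d_macs(16, 32, 3, 10, 10)
            + linear_macs(800, 128)
            + linear_macs(128, 10))

print(f"OurFC MACs:  {fc_macs}")
print(f"OurCNN MACs: {cnn_macs} ({cnn_macs / fc_macs:.1f}x OurFC)")

# Weights in the first layer for a hypothetical 1000x1000 grayscale input
fc_first_layer_weights = linear_macs(1000 * 1000, 256)
conv_first_layer_weights = 1 * 16 * 5 * 5   # unchanged by image size
print(f"first FC layer weights at 1000x1000:   {fc_first_layer_weights}")
print(f"first conv layer weights at 1000x1000: {conv_first_layer_weights}")
```

On this estimate the CNN does several times more arithmetic per image than the FC network despite having fewer parameters, while at 1000x1000 the first fully connected layer alone would need hundreds of millions of weights.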

Train classifier on CIFAR-10 data.

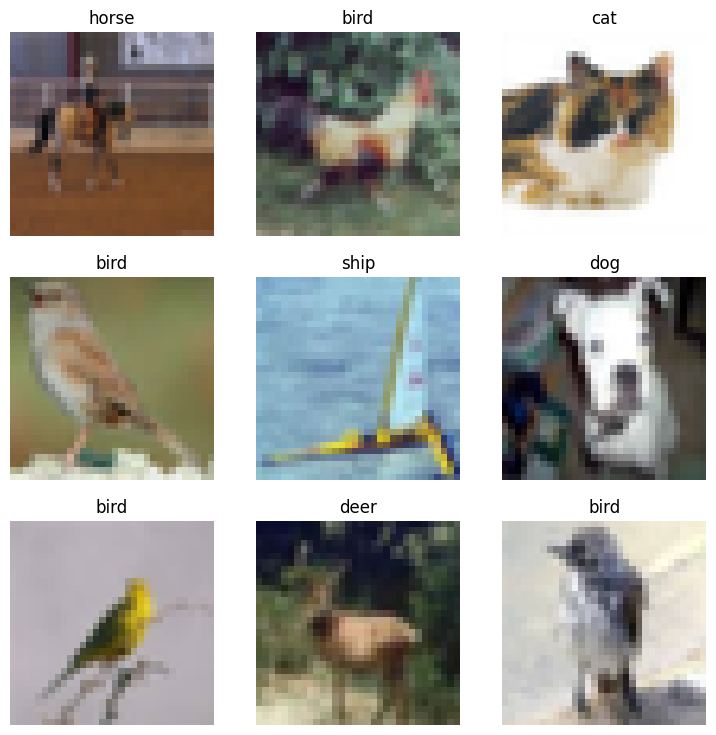

Now, let's move on to color images. CIFAR-10 is another widely used dataset. Here all images are in color, i.e., each image has 3 color channels instead of the single channel in MNIST.

Create data loaders

Load the CIFAR10 train and test data with an appropriate composite transform, where the normalize transform should be transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)).

The code below will plot a 3 x 3 subplot of images including their labels.

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Create the transform, load/download CIFAR10 train and test datasets with transform

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# Define trainloader and testloader

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)

train_loader = torch.utils.data.DataLoader(trainset, batch_size=9, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

test_loader = torch.utils.data.DataLoader(testset, batch_size=9, shuffle=False, num_workers=2)

# Code to display images

# Take the first batch of 9 images
images, targets = next(iter(train_loader))

fig, ax = plt.subplots(3, 3, figsize=(9, 9))
for i in range(3):
    for j in range(3):
        # (C, H, W) -> (H, W, C), the layout imshow expects
        image = images[i*3+j].permute(1, 2, 0)
        # Undo Normalize(0.5, 0.5): map [-1, 1] back to [0, 1]
        image = image/2 + 0.5
        ax[i,j].imshow(image)
        ax[i,j].set_axis_off()
        ax[i,j].set_title(f'{classes[targets[i*3+j]]}')
plt.show()
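The image/2 + 0.5 step in the plotting code undoes the normalization applied by the transform. A small sketch of the arithmetic on scalar pixel values, assuming the mean 0.5 and standard deviation 0.5 used above (normalize and denormalize are hypothetical helper names, not torchvision functions):

```python
def normalize(x: float, mean: float = 0.5, std: float = 0.5) -> float:
    """What Normalize(mean, std) does to each pixel value: (x - mean) / std."""
    return (x - mean) / std

def denormalize(y: float) -> float:
    """Inverse used before plotting: y * 0.5 + 0.5, written as y / 2 + 0.5."""
    return y / 2 + 0.5

# ToTensor() produces values in [0, 1]; normalization maps them to [-1, 1].
for pixel in (0.0, 0.25, 0.5, 1.0):
    n = normalize(pixel)
    print(f"pixel {pixel:.2f} -> normalized {n:+.2f} -> restored {denormalize(n):.2f}")
```

Without this step imshow would receive values outside [0, 1] and clip them, which darkens the displayed images.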

Create CNN and train it

# Create CNN network.

class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.relu1 = nn.ReLU()
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.relu2 = nn.ReLU()
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.flatten = nn.Flatten()
        self.fc1 = nn.Linear(32 * 8 * 8, 512)
        self.relu3 = nn.ReLU()
        self.fc2 = nn.Linear(512, 128)
        self.relu4 = nn.ReLU()
        self.fc3 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.relu1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.relu2(x)
        x = self.pool2(x)
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu3(x)
        x = self.fc2(x)
        x = self.relu4(x)
        x = self.fc3(x)
        return x

# Train neural network.

start = time.time()

max_epoch = 4

def train(net, train_loader, criterion, optimizer, epoch):
    net.train()
    running_loss = 0.0
    correct = 0
    total = 0
    for i, data in enumerate(train_loader, 0):
        inputs, labels = data
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
    print(f'Epoch {epoch}, Loss: {running_loss/len(train_loader):.4f}, '
          f'Train Accuracy: {100 * correct / total:.2f}%')

def test(net, criterion, test_loader, epoch):
    net.eval()
    correct = 0
    total = 0
    test_loss = 0.0
    predictions = []
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = net(images)
            loss = criterion(outputs, labels)
            test_loss += loss.item()
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
            predictions.extend(predicted.tolist())
    accuracy = 100 * correct / total
    print(f'Epoch {epoch}, Test Loss: {test_loss/len(test_loader):.4f}, '
          f'Test Accuracy: {accuracy:.2f}%')
    return {'prediction': predictions, 'accuracy': accuracy}

net = CNN()

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(1, max_epoch + 1):
    train(net, train_loader, criterion, optimizer, epoch)
    test(net, criterion, test_loader, epoch)

# Final evaluation; keep the predictions for the misclassification plot
output = test(net, criterion, test_loader, epoch)

end = time.time()

print(f'Finished Training after {end-start} s ')

Epoch 1, Loss: 1.3092, Train Accuracy: 52.73%
Epoch 1, Test Loss: 1.0580, Test Accuracy: 62.46%
Epoch 2, Loss: 0.9422, Train Accuracy: 66.76%
Epoch 2, Test Loss: 0.9187, Test Accuracy: 67.73%
Epoch 3, Loss: 0.7792, Train Accuracy: 72.77%
Epoch 3, Test Loss: 0.8815, Test Accuracy: 69.92%
Epoch 4, Loss: 0.6457, Train Accuracy: 77.32%
Epoch 4, Test Loss: 0.8928, Test Accuracy: 70.78%
Epoch 4, Test Loss: 0.8928, Test Accuracy: 70.78%
Finished Training after 610.1982986927032 s

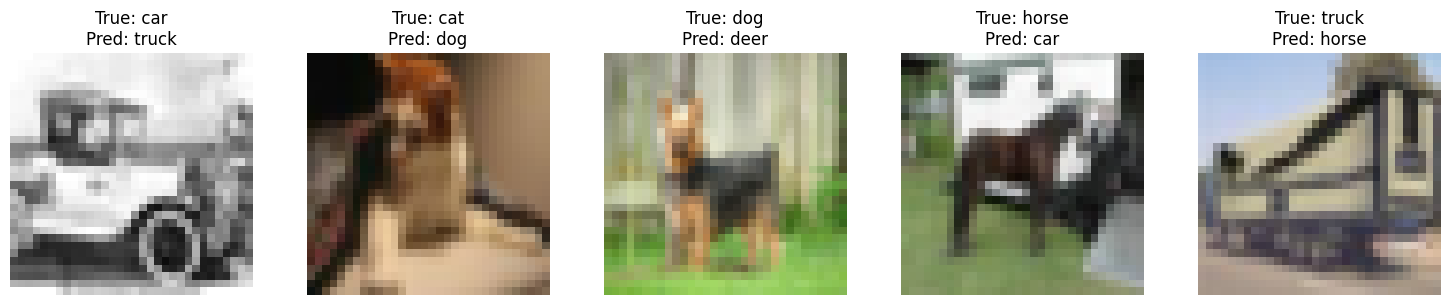

Plot misclassified test images

Plot some misclassified images in the test dataset:

- We select five images misclassified by our neural network, one for each class_id in 1, 3, 5, 7, 9 (i.e., the true label is class_id but the predicted label is not).

- We label each image with its true label and its predicted label.

total_images = 5

predictions = output['prediction']

targets = torch.tensor(testset.targets)

misclassified = {1: None, 3: None, 5: None, 7: None, 9: None}

class_indices = {1: 'car', 3: 'cat', 5: 'dog', 7: 'horse', 9: 'truck'}

# Find misclassified images

print("Searching for misclassified images...")

for idx, (pred, true) in enumerate(zip(predictions, targets)):
    # Keep only the first misclassified image found for each class of interest
    if true.item() in misclassified and pred != true.item() and misclassified[true.item()] is None:
        misclassified[true.item()] = (idx, pred, true.item())
        print(f"Found misclassified image for class {class_indices[true.item()]}: "
              f"Index={idx}, Predicted={classes[pred]}, True={class_indices[true.item()]}")

# Check if all classes have misclassified images
missing_classes = [class_id for class_id, value in misclassified.items() if value is None]
if missing_classes:
    print(f"Warning: Could not find misclassified images for classes: "
          f"{[class_indices[c] for c in missing_classes]}")

# Plot misclassified images
fig, ax = plt.subplots(1, 5, figsize=(15, 3))
for i, class_id in enumerate([1, 3, 5, 7, 9]):
    if misclassified[class_id] is not None:
        idx, pred_label, true_label = misclassified[class_id]
        image, _ = testset[idx]
        image = image.permute(1, 2, 0).detach().cpu()
        image = image / 2 + 0.5  # Denormalize
        if image.min() < 0 or image.max() > 1:
            print(f"Warning: Image at index {idx} has invalid range: min={image.min()}, max={image.max()}")
            image = torch.clamp(image, 0, 1)
        ax[i].imshow(image)
        ax[i].set_title(f'True: {class_indices[true_label]}\nPred: {classes[pred_label]}')
        ax[i].set_axis_off()
    else:
        print(f"No misclassified image found for class {class_indices[class_id]}")
        ax[i].set_axis_off()
        ax[i].set_title(f'No misclassified\n{class_indices[class_id]}')

plt.tight_layout()
plt.show()

Question: Are the mis-classified images also misleading to human eyes?

Answer: Yes, some of these misclassified images, such as the car and the dog, could also mislead human eyes, but others, such as the cat and the horse, are obvious.

Transfer Learning

In practice, people rarely train an entire CNN from scratch, because it is relatively rare to have a dataset of sufficient size (or sufficient computational power). Instead, it is common to pretrain a CNN on a very large dataset and then use it either as an initialization or as a fixed feature extractor for the task of interest.

Load pretrained model

torchvision.models contains definitions of models for addressing different tasks, including: image classification, pixelwise semantic segmentation, object detection, instance segmentation, person keypoint detection and video classification.

First, we will use torchvision.models to load a ResNet-18 that has already been trained on ImageNet. If you are interested in more details about ResNet-18, read this paper: https://arxiv.org/pdf/1512.03385.pdf.

# Equivalent to the older pretrained=True; the weights argument needs torchvision >= 0.13
resnet18 = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)

resnet18 = resnet18.to(device)

Create data loaders for CIFAR-10

Then we will need to create a modified dataset and dataloader for CIFAR-10. Importantly, the model we load has been trained on ImageNet and it expects inputs as mini-batches of 3-channel RGB images of shape (3 x H x W), where H and W are expected to be at least 224. So we need to preprocess the CIFAR-10 data to make sure it has a height and width of 224. Thus, we should add a transform when loading the CIFAR10 dataset (see torchvision.transforms.Resize).

# Create dataloader here

transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)

train_loader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)

test_loader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False, num_workers=2)

Classify test data on pretrained model

def calculate_accuracy(model, data_loader):
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in data_loader:
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    return correct / total

test_accuracy = calculate_accuracy(resnet18, test_loader)

print(f'Test Accuracy: {100 * test_accuracy:.2f}%')

Test Accuracy: 0.03%

Fine-tune (i.e., update) the pretrained model for CIFAR-10

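Now we will try to improve the test accuracy. The near-zero score above is expected: the pretrained output layer predicts one of 1000 ImageNet classes, and those class indices do not correspond to the 10 CIFAR-10 labels.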

(1) We will directly continue training the loaded model on the CIFAR-10 training data.

(2) For efficiency, we will freeze part of the loaded model's parameters. For example, we can first freeze all parameters by

for param in model.parameters(): param.requires_grad = False

and then unfreeze the last few layers by setting requires_grad = True on their parameters, e.g. with somelayer.requires_grad_(True).
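How much freezing saves depends on which layers stay trainable. The sketch below is a hand count, not a measurement. It assumes the standard torchvision ResNet-18 layout (layer4 made of two BasicBlocks with bias-free convolutions, BatchNorm layers with a weight and a bias each, and a 1x1 downsample path in the first block), the commonly cited total of 11,689,512 parameters for the 1000-class model, and that layer4 plus a new 10-class fc layer are the parts left unfrozen, as done later in this notebook.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Bias-free k x k convolution."""
    return c_in * c_out * k * k

def bn_params(channels: int) -> int:
    """BatchNorm weight and bias (running statistics are buffers, not parameters)."""
    return 2 * channels

# layer4, block 1: 256 -> 512 channels, with a 1x1 downsample path
block1 = (conv_params(256, 512, 3) + bn_params(512)
          + conv_params(512, 512, 3) + bn_params(512)
          + conv_params(256, 512, 1) + bn_params(512))

# layer4, block 2: 512 -> 512 channels
block2 = 2 * (conv_params(512, 512, 3) + bn_params(512))

layer4 = block1 + block2
new_fc = 512 * 10 + 10          # replacement head for 10 classes
old_fc = 512 * 1000 + 1000      # original ImageNet head

total = 11_689_512 - old_fc + new_fc
trainable = layer4 + new_fc

print(f"layer4 parameters:    {layer4}")
print(f"trainable parameters: {trainable} of {total} ({100 * trainable / total:.1f}%)")
```

Under these assumptions most of the parameters still receive updates, because the last residual stage is by far the widest. Unfreezing only the fc layer would leave about five thousand trainable parameters.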

def test(model, criterion, data_loader, epoch=None):
    model.eval()
    correct = 0
    total = 0
    test_loss = 0.0
    with torch.no_grad():
        for images, labels in data_loader:
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            loss = criterion(outputs, labels)
            test_loss += loss.item()
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    accuracy = 100 * correct / total
    if epoch is not None:
        print(f'Epoch {epoch}, Test Loss: {test_loss/len(data_loader):.4f}, Test Accuracy: {accuracy:.2f}%')
    else:
        print(f'Test Loss: {test_loss/len(data_loader):.4f}, Test Accuracy: {accuracy:.2f}%')
    return accuracy

# Define train function

def train(model, train_loader, criterion, optimizer, epoch):
    model.train()
    running_loss = 0.0
    correct = 0
    total = 0
    for images, labels in train_loader:
        # Move each batch to the same device as the model
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
    # Average over the training batches (train_loader, not data_loader)
    print(f'Epoch {epoch}, Train Loss: {running_loss/len(train_loader):.4f}, '
          f'Train Accuracy: {100 * correct / total:.2f}%')

# Directly train the whole model.

start = time.time()

# Modify the final layer to match CIFAR-10's 10 classes

resnet18.fc = nn.Linear(resnet18.fc.in_features, 10).to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(resnet18.parameters(), lr=0.001)

max_epoch = 5

for epoch in range(1, max_epoch + 1):
    train(resnet18, train_loader, criterion, optimizer, epoch)
    test(resnet18, criterion, test_loader, epoch)

test(resnet18, criterion, test_loader)

end = time.time()

print(f'Finished Training after {end-start} s ')

Epoch 1, Train Loss: 0.5695, Train Accuracy: 80.51%
Epoch 1, Test Loss: 0.5833, Test Accuracy: 80.71%
Epoch 2, Train Loss: 0.3257, Train Accuracy: 88.89%
Epoch 2, Test Loss: 0.3391, Test Accuracy: 88.25%
Epoch 3, Train Loss: 0.2243, Train Accuracy: 92.39%
Epoch 3, Test Loss: 0.3271, Test Accuracy: 89.59%
Epoch 4, Train Loss: 0.1617, Train Accuracy: 94.33%
Epoch 4, Test Loss: 0.3818, Test Accuracy: 87.79%
Epoch 5, Train Loss: 0.1247, Train Accuracy: 95.81%
Epoch 5, Test Loss: 0.4309, Test Accuracy: 88.01%
Test Loss: 0.4309, Test Accuracy: 88.01%
Finished Training after 881.209331035614 s

# Load a fresh ResNet-18 instance and unfreeze only the last layers.
# weights=... is equivalent to the older pretrained=True (torchvision >= 0.13)
resnet18 = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1).to(device)

# Freeze all parameters
for param in resnet18.parameters():
    param.requires_grad = False

# Replace and unfreeze the final fully connected layer
resnet18.fc = nn.Linear(resnet18.fc.in_features, 10).to(device)
for param in resnet18.fc.parameters():
    param.requires_grad = True

# Also unfreeze layer4, the last residual stage, for better adaptation
for param in resnet18.layer4.parameters():
    param.requires_grad = True

# Train the model!

start = time.time()

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(filter(lambda p: p.requires_grad, resnet18.parameters()), lr=0.001)

max_epoch = 5

for epoch in range(1, max_epoch + 1):
    train(resnet18, train_loader, criterion, optimizer, epoch)
    test(resnet18, criterion, test_loader, epoch)

test(resnet18, criterion, test_loader)

end = time.time()

print(f'Finished Training after {end-start} s ')

Epoch 1, Train Loss: 0.4442, Train Accuracy: 84.85%
Epoch 1, Test Loss: 0.3553, Test Accuracy: 87.99%
Epoch 2, Train Loss: 0.2161, Train Accuracy: 92.51%
Epoch 2, Test Loss: 0.3173, Test Accuracy: 89.75%
Epoch 3, Train Loss: 0.1187, Train Accuracy: 95.81%
Epoch 3, Test Loss: 0.3639, Test Accuracy: 89.11%
Epoch 4, Train Loss: 0.0812, Train Accuracy: 97.19%
Epoch 4, Test Loss: 0.3924, Test Accuracy: 89.88%
Epoch 5, Train Loss: 0.0557, Train Accuracy: 98.07%
Epoch 5, Test Loss: 0.3968, Test Accuracy: 90.09%
Test Loss: 0.3968, Test Accuracy: 90.09%
Finished Training after 566.8508851528168 s